
| Introduction |

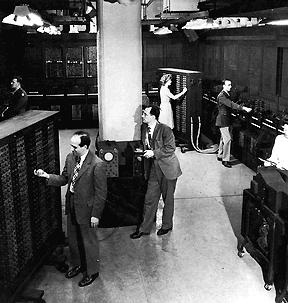

The Evolution to Parallelism

In our humble first-year opinions, it is necessary to explain how parallelism came about. Parallel computing is believed by many (though not all!) to be the next form of computing.

A Brief History of Computers

In the 19th century, Charles Babbage conceived an idea that would revolutionize the world. Unfortunately for him, this revolution would not occur for another century or so. He designed what he called the "difference engine", which was essentially a highly accurate mechanical calculator. Because of the large expense only a fraction of it was ever completed, and the computer was briefly forgotten.

In the next century, as Nazi forces swept across Europe, codes were being created and cracked in the United Kingdom for military use. Alan Turing was crucial to cracking these codes, and the primitive valve computers developed at the time helped with the work. The Allies won the war and computers finally gained important recognition.

By the 1960s, governments had large computers built from transistors, and rich companies also possessed these machines. Computers were now widely used but, because of the expense, there was usually only one per company, certainly not one per user. In the mid-1960s IBM (International Business Machines) rose to prominence. It created lower-cost mainframes: central computers with an operating system, multiple programming languages and magnetic media capable of holding several megabytes. Many programs could be supported in this environment at the same time, with the operating system effectively "juggling resources". IBM now meant "computer" to the majority of people.

Throughout the late 1960s and 1970s, advances in technology produced the integrated circuit and solid-state memory. The size and cost of computers dropped while performance increased. These new machines were called minicomputers, and often several would be distributed throughout a company. This led to a type of computing known as departmental computing. The computers in organisations became divided into central mainframes and decentralised minicomputers. As each department could now have a computer of its own, computing became more mainstream, because different fields could apply the technology.

By the late 1970s and early 1980s a new form appeared. The personal computer, devised at nearly the same time by Apple, MITS (maker of the Altair) and others, was at first an oddity, as no useful applications were available. The arrival of applications such as personal databases and spreadsheets changed this: companies could buy many personal computers for the price of a single mainframe and have every individual in a department working on a project at the same time. This, funnily enough, could be said to be a form of "manual" parallelism.

As the 1980s turned into the 1990s, mainframes were replaced by cheaper yet more productive networks of computers, with operating systems capable of sharing resources such as I/O devices while also setting aside personal user space. This has allowed a vastly more productive environment. However, the speed of a single chip will, within the next decade, reach a peak as the transistors in an integrated circuit become small enough to feel the tight grip of uncertainty at the quantum level. To keep increasing computing power, an answer must be found to this fundamental problem. The answer is believed to be parallel computing. |


Why is Parallelism the Next Stage?

Parallelism is the process of performing tasks concurrently. If you only want to perform one action, for example adding 2 to 3, there is no real benefit in attempting to do it in parallel. But almost all applications require relatively complex processes. If you had to use a single processor to compute a task A, then a task B and so on, there could usually be only one task in progress at once. By using a processor for each task and arranging the data flow correctly, one could greatly speed up the overall process. The simple fact is that sharing the workload usually gets jobs done quicker.

For a real-life example, imagine a house being built. If one man built a whole house he would have to lay the foundations, then build the walls, then build the roof and so on. He can only perform one task at once. Two men could lay the foundations together in less time, then build the walls (again in less time), and then either work on the same thing or split their efforts between separate jobs (because the parts those jobs depend on are done). The point is that, however you arrange it, the whole job takes less time.

As some jobs depend on others, a parallel processor can be structured in several different ways: there is no point having several processors waiting for a particular job if that task is only going to occur once at any given time. This leads to the different types of parallelism. In a single-task system parallelism may be useless, and a single powerful processor could be used instead. But parallelism is so useful because most computing problems require several tasks to be computed.

In conclusion, parallelism in computers is the way forward because it is not only the best way to get around the limitations of manufacturing super-fine chips but also the best way of solving complex problems. It is far easier (and cheaper) to manufacture several normal processors than one super processor. |
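The house-building argument can be put into rough numbers. The following is only a minimal sketch in Python: the job names, the hours and the dependencies in `JOBS` are invented for illustration, and it assumes the simplest possible model, in which a job cannot be split between workers and there is a free worker for every job that is ready to start.

```python
# Hypothetical house-building jobs (all figures are made up for illustration):
# name -> (hours needed, jobs that must be finished first)
JOBS = {
    "foundations": (8, []),
    "walls": (6, ["foundations"]),
    "roof": (4, ["walls"]),
    "garden": (5, []),
    "driveway": (3, []),
}


def serial_time(jobs):
    """One worker does every job in turn, so the hours simply add up."""
    return sum(hours for hours, _ in jobs.values())


def parallel_time(jobs):
    """Assume a worker is free for every job that is ready to start.

    Each job begins as soon as the jobs it depends on have finished, so the
    total time is the latest finishing time over all jobs.
    """
    finish = {}

    def finish_time(name):
        if name not in finish:
            hours, depends_on = jobs[name]
            start = max((finish_time(d) for d in depends_on), default=0)
            finish[name] = start + hours
        return finish[name]

    return max(finish_time(name) for name in jobs)


if __name__ == "__main__":
    print("one worker:    ", serial_time(JOBS), "hours")
    print("enough workers:", parallel_time(JOBS), "hours")
```

With these made-up figures the single worker needs 26 hours, while the shared job finishes in 18. The saving comes entirely from the garden and the driveway, which do not depend on anything else. The foundations, walls and roof still have to follow one another, so that chain sets the lower limit however many workers are added. A "house" consisting of a single job takes exactly as long either way, which is the single-task case described above.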
